Could Autonomy Certificates Enable AI Accountability?

A new idea for documenting levels of autonomy in AI agents could also be a boon for accountability

A new essay published last week by the Knight First Amendment Institute proposes that AI agents should be rated on their level of autonomy by a third-party governing body [1]. The authors argue that such “autonomy certificates” would act as a form of digital documentation useful for risk assessment, safety framework design, and engineering. I think they could also be a valuable tool for supporting AI accountability.

The paper defines the autonomy of an agent as “the extent to which an AI agent is designed to operate without user involvement.” Essentially, it’s how much the agent can do on its own without interacting with a user. An agent’s level of autonomy is an intentional design decision: for instance, engineers may define which tools an agent can use and the scope of its perception of its environment.

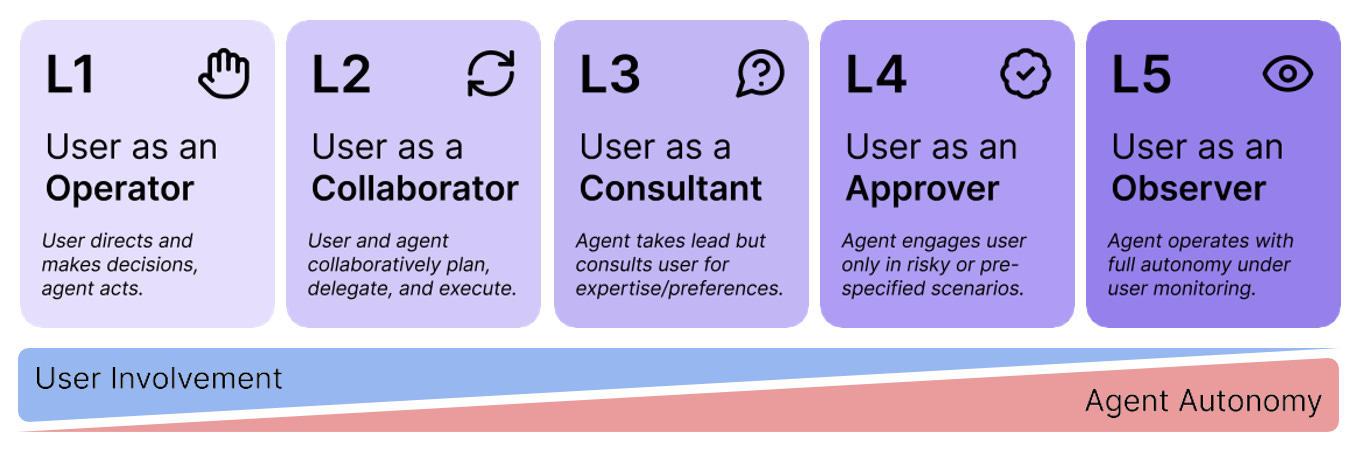

The paper articulates five levels of autonomy, ranging from level 1, where the user is an operator who drives much of the decision-making, to level 5, where the user is an observer with no capacity for involvement in the agent’s decisions or actions. In between are level 2 (user as collaborator), level 3 (user as consultant), and level 4 (user as approver).

Autonomy certificates prescribe “the maximum level of autonomy at which an agent can operate given 1) some set of technical specifications that define the agent’s capability (e.g. AI model, prompts, tools), and 2) its operational environment.” As such, they define an authorized standard for how much an agent is allowed to do within a given context. This expectation for behavior is their main benefit for AI accountability, since a standard of behavior must be established before deviations from it can trigger accountability proceedings.

For example, if an agent is certified at level 3 but begins acting at what the certificate standard defines as level 4, this might trigger a call for accountability. The provider of the AI agent might then need to explain to the third-party certification body why or how that occurred, with the possible sanction that the autonomy certificate is revoked or reissued at a different level. The autonomy certificate could thus act as a standard that helps ensure AI agents operate only at the level of autonomy for which they’ve been certified.
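To make the idea of a certified ceiling concrete, here is a minimal sketch of the comparison a monitor might perform. The essay does not specify any data format or API; the class and function names below (`AutonomyCertificate`, `exceeds_certificate`) are hypothetical, and the sketch assumes that an agent’s observed level of autonomy has already been determined somehow, which in practice would be the hard part.

```python
from dataclasses import dataclass
from enum import IntEnum


class AutonomyLevel(IntEnum):
    """The five levels described in the essay, named by the user's role."""
    OPERATOR = 1
    COLLABORATOR = 2
    CONSULTANT = 3
    APPROVER = 4
    OBSERVER = 5


@dataclass(frozen=True)
class AutonomyCertificate:
    """Hypothetical certificate record; the fields are illustrative only."""
    agent_id: str
    max_level: AutonomyLevel


def exceeds_certificate(cert: AutonomyCertificate, observed: AutonomyLevel) -> bool:
    """Return True if the agent was observed operating above its certified level."""
    return observed > cert.max_level


cert = AutonomyCertificate(agent_id="example-agent", max_level=AutonomyLevel.CONSULTANT)
print(exceeds_certificate(cert, AutonomyLevel.CONSULTANT))  # False: within the certificate
print(exceeds_certificate(cert, AutonomyLevel.APPROVER))    # True: would warrant a call for accountability
```

Using an ordered enum keeps the comparison a simple integer check, mirroring the essay’s framing of autonomy as a ranked scale from level 1 to level 5.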

Autonomy certificates would also support accountability by outlining which behaviors of the system need to be monitored. If an AI agent is scoped to use a certain set of tools autonomously (i.e., without user intervention), this creates an additional need to log that tool use. Likewise, for systems rated at lower levels of autonomy (and higher levels of user involvement), the certificate might indicate the kinds of user behaviors that need to be logged. These logs could then support explanations during accountability proceedings if the AI agent were observed misusing a tool, or if a user were found to be approving harmful actions that the AI agent suggested.
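One way to picture how a certificate’s scope could drive logging is the sketch below. It assumes, hypothetically, that a certificate lists the tools an agent may use without user intervention (`AUTONOMOUS_TOOLS`); calls to those tools log the agent’s own action, while all other calls log the user’s approval decision. The tool names and the `AuditLog` class are invented for illustration and are not drawn from the essay.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AuditLog:
    """Hypothetical in-memory log; a real deployment would persist entries."""
    entries: list = field(default_factory=list)

    def record(self, event: str, **details) -> None:
        self.entries.append({"time": time.time(), "event": event, **details})


# Hypothetical: tools the certificate scopes for use without user intervention.
AUTONOMOUS_TOOLS = {"web_search", "send_email"}


def call_tool(log: AuditLog, tool: str, args: dict, user_approved: Optional[bool] = None) -> bool:
    """Log a tool call according to the certificate's scope; return whether it may run."""
    if tool in AUTONOMOUS_TOOLS:
        # Autonomous use: the agent's own action is what must be traceable.
        log.record("autonomous_tool_call", tool=tool, args=args)
        return True
    # User-involved use: log the user's decision, since responsibility shifts to them.
    approved = bool(user_approved)
    log.record("user_decision", tool=tool, args=args, approved=approved)
    return approved


log = AuditLog()
call_tool(log, "send_email", {"to": "someone@example.com"})
call_tool(log, "delete_file", {"path": "report.txt"}, user_approved=True)
print([e["event"] for e in log.entries])  # ['autonomous_tool_call', 'user_decision']
```

The split mirrors the paragraph above: the higher the certified autonomy, the more the log captures agent actions; the lower it is, the more it captures user approvals.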

The authors suggest that autonomy certificates would be produced through a third-party evaluation process that systematically tests an AI agent to identify the “minimum level of user involvement needed for the agent to exceed a certain accuracy or pass rate threshold” on a given benchmark task. Certificates would also need to be updated as systems change (e.g., when new models are released). Producing them would therefore take a fair amount of expert human attention, and thus resources. But the benefits to accountability could be meaningful.
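The evaluation rule quoted above can be read as a simple search: starting from the most autonomous level (least user involvement), find the first level at which the agent’s benchmark pass rate exceeds the threshold. The sketch below assumes a caller-supplied function that returns a pass rate for each level; how the benchmark would actually be run with a real or simulated user at each level is not specified here, and the pass rates in the example are made up.

```python
from typing import Callable, Optional


def certify_max_level(pass_rate_at: Callable[[int], float], threshold: float) -> Optional[int]:
    """Return the highest autonomy level (1-5) whose pass rate exceeds the threshold.

    Scanning from level 5 downward finds the minimum user involvement needed;
    returns None if no level qualifies.
    """
    for level in range(5, 0, -1):
        # Strictly exceed the threshold, following the essay's wording.
        if pass_rate_at(level) > threshold:
            return level
    return None


# Made-up pass rates for illustration only: more user involvement, higher pass rate.
HYPOTHETICAL_PASS_RATES = {5: 0.62, 4: 0.81, 3: 0.93, 2: 0.97, 1: 0.99}

print(certify_max_level(lambda level: HYPOTHETICAL_PASS_RATES[level], threshold=0.9))  # 3
```

Each call to `pass_rate_at` stands in for a full benchmark run, which hints at why the authors expect the process to be resource-intensive, and why it would need repeating whenever the underlying model or tools change.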

References

[1] Feng, K. J. K., McDonald, D. W. & Zhang, A. X. Levels of Autonomy for AI Agents. Knight First Amendment Institute. July 2025. https://knightcolumbia.org/content/levels-of-autonomy-for-ai-agents-1